#Generative AI For Operational Efficiency


Text

Generative AI: Transforming manufacturing with predictive maintenance, innovative designs, superior quality control, and efficient supply chains. Drive innovation in your industry!

#AI-Enhanced Manufacturing Solutions#Generative AI For Factory Efficiency#AI In Supply Chain Analytics#AI-Driven Industrial Efficiency#Generative AI For Manufacturing Intelligence#AI In Production Workflows#AI-Powered Manufacturing Transformation#Generative AI For Operational Efficiency#AI In Manufacturing Cost Management#AI-Driven Factory Processes#Generative AI For Industrial Productivity#AI In Production Forecasting#AI-Powered Manufacturing Automation#Generative AI For Quality Manufacturing#AI In Operational Innovation#AI-Driven Manufacturing Analytics

0 notes

Text

#AI Factory#AI Cost Optimize#Responsible AI#AI Security#AI in Security#AI Integration Services#AI Proof of Concept#AI Pilot Deployment#AI Production Solutions#AI Innovation Services#AI Implementation Strategy#AI Workflow Automation#AI Operational Efficiency#AI Business Growth Solutions#AI Compliance Services#AI Governance Tools#Ethical AI Implementation#AI Risk Management#AI Regulatory Compliance#AI Model Security#AI Data Privacy#AI Threat Detection#AI Vulnerability Assessment#AI proof of concept tools#End-to-end AI use case platform#AI solution architecture platform#AI POC for medical imaging#AI POC for demand forecasting#Generative AI in product design#AI in construction safety monitoring

0 notes

Text

#ERP solution for contractors#Contractor management software#Construction ERP software#All-in-one ERP for contractors#Best ERP for construction industry#Project management ERP for contractors#Contractor operations software#ERP for general contractors#ERP for specialty contractors#Streamlining contractor operations#Best ERP software for construction contractors#ERP for managing contractor bids and projects#Cloud-based ERP for construction industry#How to streamline contractor operations with ERP#ERP solution for efficient project management#Contractor job tracking software#Financial management ERP for contractors#AI-powered ERP for contractors#Real-time project tracking ERP#Contractor workforce management ERP

1 note


Text

Generative AI for Startups: 5 Essential Boosts to Boost Your Business

The future of business growth lies in the ability to innovate rapidly, deliver personalized customer experiences, and operate efficiently. Generative AI is at the forefront of this transformation, offering startups unparalleled opportunities for growth in 2024.

Generative AI is a game-changer for startups, significantly accelerating product development by quickly generating prototypes and innovative ideas. This enables startups to innovate faster, stay ahead of the competition, and bring new products to market more efficiently. The technology also allows for a high level of customization, helping startups create highly personalized products and solutions that meet specific customer needs. This enhances customer satisfaction and loyalty, giving startups a competitive edge in their respective industries.

By automating repetitive tasks and optimizing workflows, Generative AI improves operational efficiency, saving time and resources while minimizing human errors. This allows startups to focus on strategic initiatives that drive growth and profitability. Additionally, Generative AI’s ability to analyze large datasets provides startups with valuable insights for data-driven decision-making, ensuring that their actions are informed and impactful. This data-driven approach enhances marketing strategies, making them more effective and personalized.

Intelisync offers comprehensive AI/ML services that support startups in leveraging Generative AI for growth and innovation. With Intelisync’s expertise, startups can enhance product development, improve operational efficiency, and develop effective marketing strategies. Transform your business with the power of Generative AI—Contact Intelisync today and unlock your Learn more...

#5 Powerful Ways Generative AI Boosts Your Startup#advanced AI tools support startups#Driving Innovation and Growth#Enhancing Customer Experience#Forecasting Data Analysis and Decision-Making#Generative AI#Generative AI improves operational efficiency#How can a startup get started with Generative AI?#Is Generative AI suitable for all types of startups?#marketing strategies for startups#Streamlining Operations#Strengthen Product Development#Transform your business with AI-driven innovation#What is Generative AI#Customized AI Solutions#AI Development Services#Custom Generative AI Model Development.

0 notes

Text

#Generative AI#Japan#efficiency#responsible governance#adoption#major companies#data breaches#authenticity#survey#implementation#operational efficiency#text analysis#drafting#chatbots#security#information leaks#false data#copyright issues#personal information#governance#technology impact#tokyo#investment

1 note


Text

A summary of the Chinese AI situation, for the uninitiated.

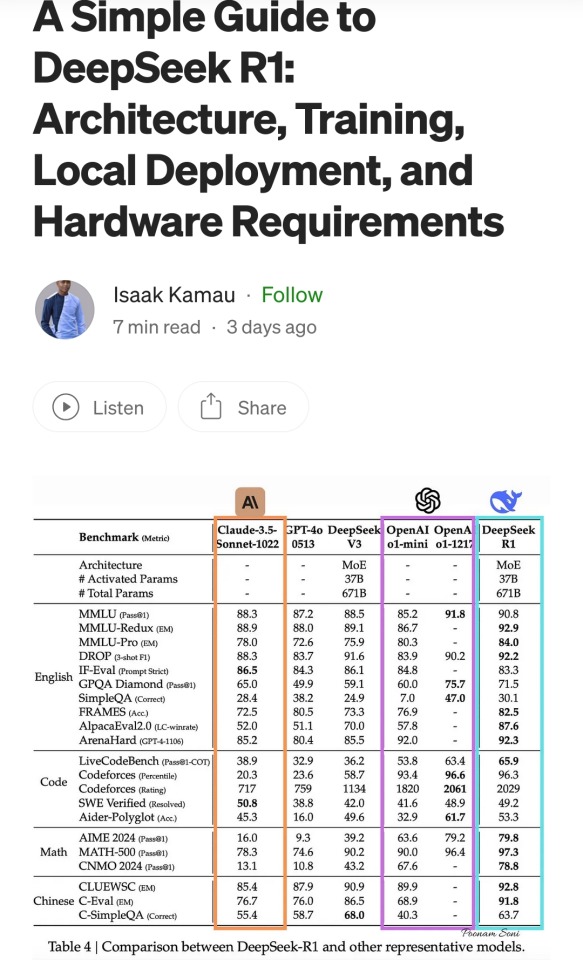

These are scores on different tests that are designed to see how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the computer requirements so far are so high that only a few people currently have the machines at home required to run them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think how a Ford T needed a long chimney to get rid of a ton of black smoke, which was unused petrol. Over the next hundred years combustion engines have become much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, the models are all around the same accuracy. These tests are in constant evolution as well: as soon as they start becoming obsolete, new ones are released to adjust for a more complicated benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing hard on much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a short chunk of text, roughly four characters of English on average (it's more complicated than that but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

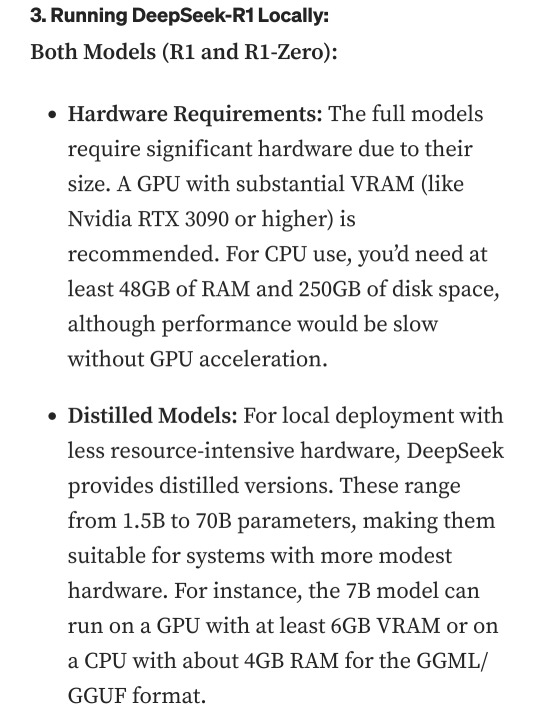

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this is telling all those people who just sold their high-end gaming rig to be able to afford a machine that can run the latest ChatGPT locally, is that the person who bought it from them can run something basically just as powerful on their old one.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

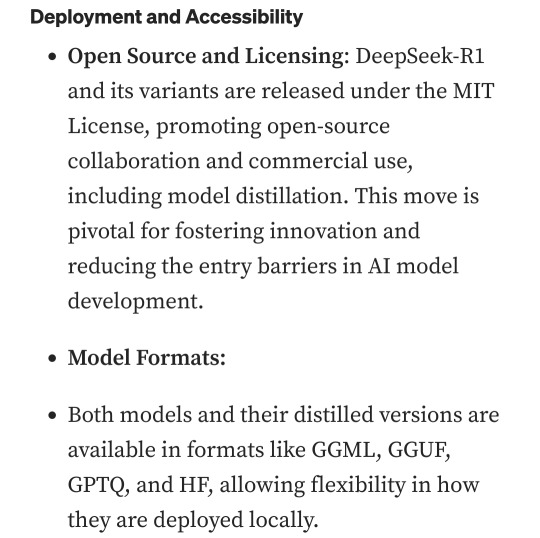

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

433 notes


Text

The latest, AI-dedicated server racks contain 72 specialised chips from manufacturer Nvidia. The largest “hyperscale” data centres, used for AI tasks, would have about 5,000 of these racks. And as anyone using a laptop for any period of time knows, even a single chip warms up in operation. To cool the servers requires water – gallons of it. Put all this together, and a single hyperscale data centre will typically need as much water as a town of 30,000 people – and the equivalent amount of electricity. The Financial Times reports that Microsoft is currently opening one of these behemoths somewhere in the world every three days. Even so, for years, the explosive growth of the digital economy had surprisingly little impact on global energy demand and carbon emissions. Efficiency gains in data centres—the backbone of the internet—kept electricity consumption in check. But the rise of generative AI, turbocharged by the launch of ChatGPT in late 2022, has shattered that equilibrium. AI elevates the demand for data and processing power into the stratosphere. The latest version of OpenAI’s flagship GPT model, GPT-4, is built on 1.3 trillion parameters, with each parameter describing the strength of a connection between different pathways in the model’s software brain. The more novel data that can be pushed into the model for training, the better – so much data that one research paper estimated machine learning models will have used up all the data on the internet by 2028. Today, the insatiable demand for computing power is reshaping national energy systems. Figures from the International Monetary Fund show that data centres worldwide already consume as much electricity as entire countries like France or Germany. It forecasts that by 2030, the worldwide energy demand from data centres will be the same as India’s total electricity consumption.

30 May 2025

81 notes


Text

The Trump administration’s Federal Trade Commission has removed four years’ worth of business guidance blogs as of Tuesday morning, including important consumer protection information related to artificial intelligence and the agency’s landmark privacy lawsuits under former chair Lina Khan against companies like Amazon and Microsoft. More than 300 blogs were removed.

On the FTC’s website, the page hosting all of the agency’s business-related blogs and guidance no longer includes any information published during former president Joe Biden’s administration, current and former FTC employees, who spoke under anonymity for fear of retaliation, tell WIRED. These blogs contained advice from the FTC on how big tech companies could avoid violating consumer protection laws.

One now deleted blog, titled “Hey, Alexa! What are you doing with my data?” explains how, according to two FTC complaints, Amazon and its Ring security camera products allegedly leveraged sensitive consumer data to train the ecommerce giant’s algorithms. (Amazon disagreed with the FTC’s claims.) It also provided guidance for companies operating similar products and services. Another post titled “$20 million FTC settlement addresses Microsoft Xbox illegal collection of kids’ data: A game changer for COPPA compliance” instructs tech companies on how to abide by the Children’s Online Privacy Protection Act by using the 2023 Microsoft settlement as an example. The settlement followed allegations by the FTC that Microsoft obtained data from children using Xbox systems without the consent of their parents or guardians.

“In terms of the message to industry on what our compliance expectations were, which is in some ways the most important part of enforcement action, they are trying to just erase those from history,” a source familiar tells WIRED.

Another removed FTC blog titled “The Luring Test: AI and the engineering of consumer trust” outlines how businesses could avoid creating chatbots that violate the FTC Act’s rules against unfair or deceptive products. This blog won an award in 2023 for “excellent descriptions of artificial intelligence.”

The Trump administration has received broad support from the tech industry. Big tech companies like Amazon and Meta, as well as tech entrepreneurs like OpenAI CEO Sam Altman, all donated to Trump’s inauguration fund. Other Silicon Valley leaders, like Elon Musk and David Sacks, are officially advising the administration. Musk’s so-called Department of Government Efficiency (DOGE) employs technologists sourced from Musk’s tech companies. And already, federal agencies like the General Services Administration have started to roll out AI products like GSAi, a general-purpose government chatbot.

The FTC did not immediately respond to a request for comment from WIRED.

Removing blogs raises serious compliance concerns under the Federal Records Act and the Open Government Data Act, one former FTC official tells WIRED. During the Biden administration, FTC leadership would place “warning” labels above previous administrations’ public decisions it no longer agreed with, the source said, fearing that removal would violate the law.

Since President Donald Trump designated Andrew Ferguson to replace Khan as FTC chair in January, the Republican regulator has vowed to leverage his authority to go after big tech companies. Unlike Khan, however, Ferguson’s criticisms center around the Republican party’s long-standing allegations that social media platforms, like Facebook and Instagram, censor conservative speech online. Before being selected as chair, Ferguson told Trump that his vision for the agency also included rolling back Biden-era regulations on artificial intelligence and tougher merger standards, The New York Times reported in December.

In an interview with CNBC last week, Ferguson argued that content moderation could equate to an antitrust violation. “If companies are degrading their product quality by kicking people off because they hold particular views, that could be an indication that there's a competition problem,” he said.

Sources speaking with WIRED on Tuesday claimed that tech companies are the only groups who benefit from the removal of these blogs.

“They are talking a big game on censorship. But at the end of the day, the thing that really hits these companies’ bottom line is what data they can collect, how they can use that data, whether they can train their AI models on that data, and if this administration is planning to take the foot off the gas there while stepping up its work on censorship,” the source familiar alleges. “I think that's a change big tech would be very happy with.”

77 notes


Note

Hiii so I was reading your posts about how confusing the dreams are and I was trying to come up with an explanation of my own. What if both Malleus and the dreamers are subconsciously influencing the dreams and that's why no one really understands what's happening? Because we're not always aware of what's going on in that part of our minds.

[Referencing this post!]

That might be the case?? I really wish this was the lore we received from the beginning; it would have saved us a lot of trouble in the long run if the devs had chosen to go with a looser, more vague explanation of the dream worlds.

@/twistedminutia suggested in this post that the dreams may operate like an AI algorithm, which I thought was an interesting concept + is similar to what anon is pitching too. The idea is that Malleus isn't directly influencing the dreams or determining explicit details within them, but rather he has set a definition for what makes a person "happy" and his autonomous magic (i.e. the AI) is running off of that definition to determine what would be the most efficient path to "happiness". However, the end result tends to be shallow because of this. The commenter then proposes that Malleus might associate "happiness" with being in control, and because of that, it accidentally "colors" or influences the dreams of those touched by his magic. Thinking back on what we've witnessed so far... Malleus associating control with happiness might not be that far-fetched. Several of the dreams we've witnessed so far involve granting the dreamer a sense of control or outright placing the dreamers in positions of power. Lilia is restored to his days as a war general, Leona is the unquestioned king of the savanna, Cater is Heartslabyul's dorm leader, Azul leads a Coral Rush team, Vil is Neige's boss, Jamil is student council president, etc. Malleus himself expresses being insecure when he lacks control over a given situation. In 7-29, he confides in Silver:

"There's something my Grandmother has often talked to me about. It's the reason why our family, with our draconic lineage, is so exceptionally powerful even among the nocturnal fae. She said it's to ensure that nothing ever diminishes the happiness of our people in Briar Valley. Yet here I am, incapable of dispelling the sorrows of father and son alike. What good does all this magic do me? ...I'm completely powerless."

Malleus has also previously acted in ways which suggest that he interacts with the world by projecting his own experiences onto others and relating to them that way. For example, he helps out the late ghosts in Endless Halloween Night because he feels a kinship with them as someone who also misses out on celebrations. In his own dorm uniform vignettes, Malleus thinks of what would be most convenient for him to attend dorm meetings and disregards how his classmates would feel at being summoned like objects. This makes sense, as he has a limited understanding of the world beyond his castle walls and of non-fae societies in general. Malleus only has his own experiences to go off of.

Thinking of it like that, it does make some semblance of sense. Malleus's subconscious desire for control might be trickling into the dreams and either influencing or overriding what the dreamers truly desire in their hearts. And while we're on this topic, maybe it also depends on the dreamer...? Like maybe the more emotionally vulnerable the dreamer is, the more of Malleus's subconscious impacts them? For example, Cater has demonstrated confusion over his identity and what he wishes to do for his internships. This lack of self could mean that Malleus's influence projected more strongly on Cater's dream in order to fill in all those cracks, thus resulting in a dream that is very far away from, even the opposite of, what Cater wants. Azul and Vil have had histories where they were judged and rejected by their peers. Leona and Jamil have their "second place syndromes". And Lilia has to deal with the inevitability of aging and leaving behind his loved ones for a foreign land...

But hey, that's just a theory ^^ A gaaaaaame theory--

#twst#twisted wonderland#disney twisted wonderland#disney twst#Malleus Draconia#Silver#book 7 spoilers#notes from the writing raven#question#Lilia Vanrouge#Leona Kingscholar#Vil Schoenheit#Neige LeBlanche#Cater Diamond#Jamil Viper#Azul Ashengrotto#twst theory#twst theories#twisted wonderland theories#twisted wonderland theory#endless halloween night spoilers#Malleus dorm uniform vignette spoilers

79 notes


Text

Discover how generative AI solves manufacturing challenges: predictive maintenance, optimized design, quality control, and supply chain efficiency. Innovate your production today!

#AI-Driven Production Enhancements#Generative AI For Process Automation#AI In Manufacturing Intelligence#Generative AI For Manufacturing Improvement#AI In Industrial Efficiency#AI-Enhanced Manufacturing Workflows#Generative AI For Operational Excellence#AI In Production Management#AI-Driven Manufacturing Optimization#Generative AI For Supply Chain Resilience#AI In Process Innovation#AI In Manufacturing Performance#Generative AI For Manufacturing Analytics#AI In Production Quality#AI-Powered Factory Efficiency#Generative AI For Cost-Effective Manufacturing

0 notes

Note

Hai, I saw ur post on generative AI and couldn’t agree more. Ty for sharing ur knowledge!!!!

Seeing ur background in CS,,, I wanna ask how do u think V1 and other machines operate? My HC is that they have a main CPU that does like OS management and stuff, some human brain chunks (grown or extracted) as neural networks kinda as we know it now as learning/exploration modules, and normal processors for precise computation cores. The blood and additional organs are to keep the brain cells alive. And they have blood to energy converters for the rest of the whatevers. I might be nerding out but I really want to see what another CS person would think on this.

Btw ur such a good artist!!!! I look up to u so much as a CS student and beginner drawer. Please never stop being so epic <3

okay okay okAY OKAY- I'll note I'm still ironing out more solid headcanons as I've only just really started to dip my toes into writing about the Ultrakill universe, so this is gonna be more 'speculative spitballing' than anything

I'll also put the full lot under a read more 'cause I'll probably get rambly with this one

So with regards to machines - particularly V1 - in fic I've kinda been taking a 'grounded in reality but taking some fictional liberties all the same' kind of approach -- as much as I do have an understanding and manner-of-thinking rooted in real-world technical knowledge, the reality is AI just Does Not work in the ways necessary for 'sentience'. A certain amount of 'suspension of disbelief' is required, I think.

Further to add, there also comes a point where you do have to consider the readability of it, too -- as you say, stuff like this might be our bread and butter, but there's a lot of people who don't have that technical background. On one hand, writing a very specific niche for people also in that specific niche sounds fun -- on the other, I'd like the work to still be enjoyable for those not 'in the know' as it were. Ultimately while some wild misrepresentations of tech do make me cringe a bit on a kneejerk reaction -- I ought to temper my expectations a little. Plus, if I'm being honest, I mix up my terminology a lot and I have a degree in this shit LMFAO

Anyway -- stuff that I have written so far in my drafts definitely tilts more towards 'total synthesis even of organic systems'; at their core, V1 is a machine, and their behaviors reflect that reality accordingly. They have a manner of processing things in absolutes, logic-driven and fairly rigid in nature, even when you account for the fact that they likely have multitudes of algorithmic processes dedicated to knowledge acquisition and learning. Machine Learning algorithms are less able to account for anomalies, less able to demonstrate adaptive pattern prediction when a dataset is smaller -- V1 hasn't been in Hell very long at all, and a consequence will be limited data to work with. Thus -- mistakes are bound to happen. Incorrect predictions are bound to happen. Less so with the more data they accumulate over time, admittedly, but still.

However, given they're in possession of organic bits (synthesized or not), as well as the fact that the updated death screen basically confirms a legitimate fear of dying, there's opportunity for internal conflict -- as well as something that can make up for that rigidity in data processing.

The widely-accepted idea is that y'know, blood gave the machines sentience. I went a bit further with the idea, that when V1 was created, their fear of death was a feature and not a side-effect. The bits that could be considered organic are used for things such as hormone synthesis: adrenaline, cortisol, endorphins, oxytocin. Recipes for human instinct of survival, translated along artificial neural pathways into a language a machine can understand and interpret. Fear of dying is very efficient at keeping one alive: it transforms what's otherwise a mathematical calculation into incentive. AI by itself won't care for mistakes - it can't, there's nothing actually 'intelligent' about artificial intelligence - so in a really twisted, fucked up way, it pays to instil an understanding of consequence for those mistakes.

(These same incentive systems are also what drive V1 to do crazier and crazier stunts -- it feels awesome, so hell yeah they're gonna backflip through Hell while shooting coins to nail husks and demons and shit in the face.)

The above is a very specific idea I've had clattering around in my head, now I'll get to the more generalized techy shit.

Definitely some form of overarching operating system holding it all together, naturally (I have to wonder if it's the same SmileOS the Terminals use? Would V1's be a beta build, or on par with the Terminals, or a slightly outdated but still-stable version? Or do they have their own proprietary OS more suited to what they were made for and the kinds of processes they operate?)

They'd also have a few different kinds of ML/AI algorithms for different purposes -- for example, combat analysis could be relegated to a Support Vector Machine (SVM) ML algorithm (or multiple) -- something that's useful for data classification (e.g. categorizing different enemies) and regression (i.e. predicting continuous values -- perhaps behavioral analysis?). SVMs are fairly versatile on both fronts of classification and regression, so I'd wager a fair chunk of their processing is done by this.
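To make the classification half of that a bit more concrete, here's a minimal sketch of a linear SVM sorting enemies into two categories. Everything in it is an assumption for illustration: the two standardised features (call them speed and armour), the toy readings, the labels and the function names are all made up, and the training loop is plain full-batch subgradient descent on the hinge loss rather than anything a real library (or a real war machine) would necessarily use.

```python
# Hypothetical, standardised (speed, armour) readings for two enemy types.
POINTS = [(2, 1), (1, 2), (2, 2), (1, 1),
          (-2, -1), (-1, -2), (-2, -2), (-1, -1)]
LABELS = [1, 1, 1, 1, -1, -1, -1, -1]  # +1 = "demon", -1 = "husk" (made-up labels)


def train_linear_svm(points, labels, lr=0.01, lam=0.01, epochs=500):
    """Full-batch subgradient descent on the L2-regularised hinge loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(points)
    for _ in range(epochs):
        # The regulariser pulls the weights towards zero (i.e. a wider margin).
        grad_w = [lam * w[0], lam * w[1]]
        grad_b = 0.0
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            if margin < 1.0:
                # Only points inside the margin contribute to the hinge term.
                grad_w[0] -= y * x1 / n
                grad_w[1] -= y * x2 / n
                grad_b -= y / n
        w = [w[0] - lr * grad_w[0], w[1] - lr * grad_w[1]]
        b -= lr * grad_b
    return w, b


def classify(w, b, point):
    """Return +1 or -1 depending on which side of the boundary the point falls."""
    score = w[0] * point[0] + w[1] * point[1] + b
    return 1 if score >= 0 else -1


w, b = train_linear_svm(POINTS, LABELS)
print("weights:", w, "bias:", b)
print("new reading (1.5, 1.0) ->", classify(w, b, (1.5, 1.0)))
print("new reading (-0.5, -2.0) ->", classify(w, b, (-0.5, -2.0)))
```

The regression side would swap the hinge loss for an epsilon-insensitive one, but the overall shape of the loop stays the same.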

SVMs can be used in natural language processing (NLP) but given the implied complexity of language understanding we see ingame (i.e comprehending bossfight monologues, reading books, etc) there's probably a dedicated Large Language Model (LLM) of some kind; earlier and more rudimentary language processing ML models couldn't do things as complex as relationship and context recognition between words, but multi-dimensional vectors like you'd find in an LLM can.

Of course if you go the technical route instead of the 'this is a result of the blood-sentience thing', that does leave the question of why their makers would give a war machine something as presumably useless as language processing. I mean, if V1 was built to counter Earthmovers solo, I highly doubt 'collaborative effort' was on the cards. Or maybe it was; that's the fun in headcanons~

As I've said, I'm still kinda at the stage of figuring out what I want my own HCs to be, so these are the only concrete musings I can offer at the minute -- though I really enjoyed this opportunity to think about it, so thank you!

Best of luck with your studies and your art, anon. <3

20 notes


Text

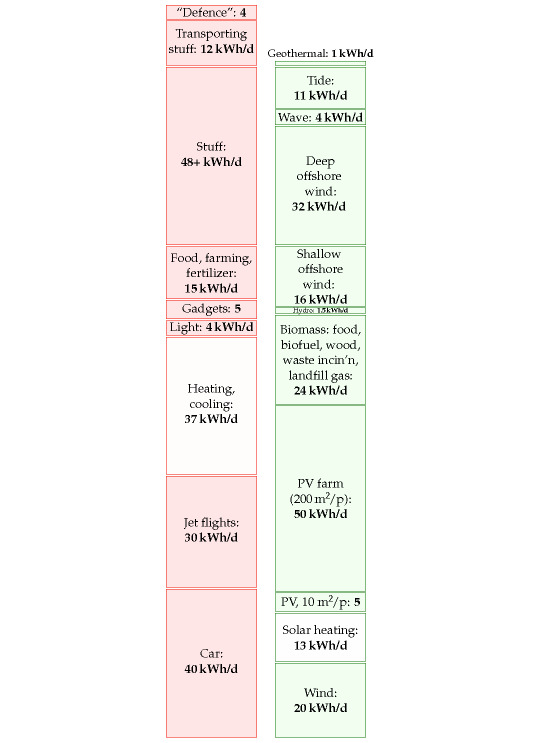

how much power does tech really use, compared to other shit?

my dash has been full of arguing about AI power consumption recently. so I decided to investigate a bit.

it's true, as the Ars Technica article argues, that AI is still only one fairly small part of the overall tech sector power consumption, potentially comparable to things like PC gaming. what's notable is how quickly it's grown in just a few years, and this is likely to be a limit to how much more it can scale.

I think it is reasonable to say that adding generative AI at large scale to systems that did not previously have generative AI (phones, Windows operating system etc.) will increase the energy cost. it's hard to estimate by how much. however, the bulk of AI energy use is in training, not querying. in some cases 'AI' might lead to less energy use, e.g. using an AI denoiser will reduce the energy needed to render an animated film.

the real problem being exposed is that most of us don't really have any intuition for how much energy is used for what. you can draw comparisons all sorts of ways. compare it to the total energy consumption of humanity and it may sound fairly niche; compare it to the energy used by a small country (I've seen Ireland as one example, which used about 170TWh in 2022) and it can sound huge.

but if we want to reduce the overall energy demand of our species (to slow our CO2 emissions in the short term, and accommodate the limitations of renewables in a hypothetical future), we should look at the full stack. how does AI, crypto and tech compare to other uses of energy?

here's how physicist David MacKay broke down energy use per person in the UK way back in 2008 in Sustainable Energy Without The Hot Air, and his estimate of a viable renewable mix for the UK.

('Stuff' represents the embedded energy of manufactured goods not covered by the other boxes. 'Gadgets' represents the energy used by electronic devices including passive consumption by devices left on standby, and datacentres supporting them - I believe the embodied energy cost of building them falls under 'stuff' instead.)

today those numbers would probably look different - populations change, tech evolves, etc. etc., and this notably predates the massive rise in network infrastructure and computing tech that the Ars article describes. I'm sure someone's come up with a more up-to-date SEWTHA-style estimate of how energy consumption breaks down since then, but I don't have it to hand.

that said, the relative sizes of the blocks won't have changed that much. we still eat, heat our homes and fly about as much as ever; electric cars have become more popular but the fleet is still mostly petrol-powered. nothing has fundamentally changed in terms of the efficiency of most of this stuff. depending where you live, things might look a bit different - less energy on heating/cooling or more on cars for example.

how big a block would AI and crypto make on a chart like this?

per the IEA, crypto used 100-150TWh of electricity worldwide in 2022. in MacKay's preferred unit of kWh/day/person, that would come to a worldwide average of just 0.04kWh/day/person. that is of course imagining that all eight billion of us use crypto, which is not true. if you looked at the total crypto-owning population, estimated to be 560 million in 2024, that comes to about 0.6kWh/day/crypto-owning person for cryptocurrency mining [2022/2024 data]. I'm sure that applies to a lot of people who just used crypto once to buy drugs or something, so the footprint of 'heavier' crypto users would be higher.
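for anyone who wants to check the unit conversion, here's a rough sketch in Python. the function name and the 125TWh midpoint of the IEA range are my own assumptions for illustration; the head counts are the ones quoted above.

```python
KWH_PER_TWH = 1e9      # 1 TWh = 1,000,000,000 kWh
DAYS_PER_YEAR = 365


def kwh_per_day_per_person(twh_per_year, people):
    """Convert an annual total in TWh into kWh/day/person."""
    return twh_per_year * KWH_PER_TWH / DAYS_PER_YEAR / people


CRYPTO_TWH = 125           # assumed midpoint of the IEA's 100-150TWh range
WORLD_POPULATION = 8e9     # "all eight billion of us"
CRYPTO_OWNERS = 560e6      # 2024 estimate quoted above

world_average = kwh_per_day_per_person(CRYPTO_TWH, WORLD_POPULATION)
per_owner = kwh_per_day_per_person(CRYPTO_TWH, CRYPTO_OWNERS)

print(f"{world_average:.2f} kWh/day/person averaged over everyone")
print(f"{per_owner:.1f} kWh/day/person averaged over crypto owners")
```

swapping in 100 or 150 for `CRYPTO_TWH` gives the two ends of the range.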

I'm actually a little surprised by this - I thought crypto was way worse. it's still orders of magnitude more demanding than other transaction systems but I'm rather relieved to see we haven't spent that much energy on the red queen race of cryptomining.

the projected energy use of AI is a bit more vague - depending on your estimate it could be higher or lower - but it would be a similar order of magnitude (around 100TWh).

SEWTHA calculated that in 2007, data centres in the USA added up to 0.4kWh/day/person. the Ars article shows worldwide total data centre energy use increasing by a factor of about 7 since then; the world population has increased from just under 7 billion to nearly 8 billion. so the amount per person has probably increased about sixfold, to around 2.4kWh/day/person for data centres in the USA [extrapolated estimate based on 2007 data] - for Americans, anyway.
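the extrapolation can be sketched like this. the 6.7 billion figure for 2007 is my assumption standing in for "just under 7 billion", and the whole thing assumes US data centre use grew at the worldwide rate, which is exactly the weak point of the estimate.

```python
# rough extrapolation of the 2007 US data centre figure to today
US_2007_KWH_PER_DAY = 0.4   # SEWTHA figure for US data centres, 2007
TOTAL_GROWTH = 7            # approximate growth in worldwide data centre energy use
POP_2007 = 6.7e9            # assumption: "just under 7 billion"
POP_NOW = 8e9               # assumption: "nearly 8 billion"

# total use grew ~7x, but it is shared among more people,
# so per-person growth is a bit smaller than 7x
per_person_growth = TOTAL_GROWTH * POP_2007 / POP_NOW
estimate = US_2007_KWH_PER_DAY * per_person_growth

print(f"per-person growth factor: {per_person_growth:.1f}x")
print(f"extrapolated estimate:    {estimate:.1f} kWh/day/person")
```

the unrounded factor lands a little under six, so "about sixfold" and "around 2.4" are both rounded slightly up.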

however, this is complicated because the proportion of people using network infrastructure worldwide has probably grown a lot since 2007, so a lot of that data centre expansion might be taking place outside the States.

as an alternative calculation, the IEA reports that in 2022, data centres accounted for 240-340 TWh, and transmitting data across the network, 260-360 TWh; in total 500-700TWh. averaged across the whole world, that comes to just 0.2 kWh/day/person for data centres and network infrastructure worldwide [2022 data] - though it probably breaks down very unequally across countries, which might account for the huge discrepancy in our estimates here! e.g. if you live in a country with fast, reliable internet where you can easily stream 4k video, you will probably account for much higher internet traffic than someone in a country where most people connect to the internet using phones over data.
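here's a sketch of that alternative calculation using both ends of the IEA ranges, compared against the 2.4 figure extrapolated earlier. the helper name and the round 8 billion population are my own assumptions.

```python
# worldwide per-person average from the IEA 2022 ranges
KWH_PER_TWH = 1e9
DAYS_PER_YEAR = 365
WORLD_POP = 8e9  # assumption: round figure for world population

def kwh_per_day_per_person(twh_per_year, people=WORLD_POP):
    return twh_per_year * KWH_PER_TWH / DAYS_PER_YEAR / people

data_centres_twh = (240, 340)   # (low, high)
network_twh = (260, 360)        # (low, high)

low_twh = data_centres_twh[0] + network_twh[0]    # 500
high_twh = data_centres_twh[1] + network_twh[1]   # 700

low_pp = kwh_per_day_per_person(low_twh)
high_pp = kwh_per_day_per_person(high_twh)
print(f"worldwide average: {low_pp:.2f} to {high_pp:.2f} kWh/day/person")

# how far apart are the two estimates?
US_EXTRAPOLATION = 2.4          # the extrapolated US-only figure from above
midpoint = (low_pp + high_pp) / 2
ratio = US_EXTRAPOLATION / midpoint
print(f"US extrapolation is about {ratio:.0f}x the worldwide average")
```

a gap of roughly an order of magnitude between the two numbers is consistent with usage being very unevenly spread across countries, though it could equally mean the extrapolation overshoots.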

overall, however we calculate it, it's still pretty small compared to the rest of the stack. AI is growing fast but worldwide energy use is around 180,000 TWh. humans use a lot of fucking energy. of course, reducing this is a multi-front battle, so we can still definitely stand to gain in tech. it's just not the main front here.
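to put "pretty small" into numbers, here's a quick sketch of each figure as a share of the worldwide total. note the assumption baked in: it treats the electricity figures as directly comparable with the 180,000 TWh total, same as the rough comparison above does, and the AI entry is just the vague ~100TWh projection.

```python
# share of worldwide energy use for each tech category
WORLD_ENERGY_TWH = 180_000   # rough worldwide total quoted above

def share_percent(twh: float) -> float:
    return 100 * twh / WORLD_ENERGY_TWH

# (low, high) estimates in TWh/year, taken from the figures quoted in this post
uses = {
    "AI (rough projection)": (100, 100),
    "crypto": (100, 150),
    "data centres + network": (500, 700),
}

for name, (low, high) in uses.items():
    print(f"{name}: {share_percent(low):.2f}% to {share_percent(high):.2f}%")
```

even the largest of these comes out well under half a percent of the total.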

instead, the three biggest blocks by far are transportation, heating/cooling and manufacturing. if we want to make a real dent we'd need to collectively travel by car and plane a lot less, insulate our houses better, and reduce the turnover of material objects.

126 notes

Text

David Atkins at Washington Monthly:

Treasury Secretary Scott Bessent recently appeared on Tucker Carlson’s show and said the quiet part out loud: “The president is reordering trade… we are shedding excess labor in the federal government… that will give us the labor we need for the new manufacturing.” At any other time, that kind of language would set off alarm bells across the political spectrum. Are laid-off NIH cancer researchers really going to find jobs in the iPhone factories that are being relocated to America? But today, it barely registers on the MAGA meter. To be clear, Trump himself remains motivated by the same half-baked economic ideas he’s always had: a fixation on trade deficits, rooted in the zero-sum notion that if we buy more from a country than we sell to them, we’re being “ripped off.” He’s been told repeatedly that trade deficits aren’t inherently bad. He doesn’t care. The misunderstanding is the point. And he’ll drag the global economy into a ditch rather than learn how it works.

But those around him—the far-right think tanks and political operatives shaping this agenda—are playing a longer, darker game. Trump’s tariffs aren’t just bad economics. They’re a declaration of economic war on the half of America that didn’t vote for him. This is deliberate and strategic. It’s a cultural counter-revolution disguised as industrial policy. And we know it’s not about economic leverage because Trump isn’t even pretending these tariffs are a negotiating tactic—he intends to make them permanent. As I said last month, the project is about deskilling America: reducing white-collar work through AI and remote job cuts, destroying universities, starving higher education, using tariffs to wall off the country as a manufacturing-and-extraction island, gutting the cities, and pushing men into manual labor while nudging women into domestic roles. It’s not incoherent—it’s a plan being implemented methodically.

This isn’t about economic efficiency. It’s about political control. Education has always been a democratizing force. It creates citizens who are harder to intimidate, likely to demand fair treatment, and less willing to obey autocrats. It delays childbirth, disrupts patriarchal family structures, and builds civic coalitions that threaten right-wing hegemony. That’s why it’s under attack. The goal isn’t to elevate the dignity of manual work—it’s to eliminate choice, to collapse the pathways that allow people to escape precarity and assert autonomy.

A key pillar of this reactionary movement is masculinity politics—an obsession with control over women and the restoration of a pre-modern vision of gender roles. Right-wing pundits are now proudly declaring that Trump’s tariffs will “end the masculinity crisis.” Fox News chyrons bluster that his “manly” economic policies will “make you more of a man.” The idea is that factory jobs and closed borders will somehow restore a lost sense of masculine authority that was never actually economic but cultural and social.

Much of the MAGA worldview is built on the grievances of conservative men: angry that women increasingly don’t want to date them, that younger generations are abandoning the religion that once gave them automatic status, that they are no longer guaranteed a high-paying job out of high school without having to compete with the “nerds” in their class—or with immigrants, or with workers of color overseas. Trump’s tariffs are imagined as a cure-all: destroy the livelihoods of the educated men they resent, displace women from the professional fields where they thrive, and reassert dominance over a labor force they believe was rightfully theirs. That’s what’s behind the economic shock therapy now underway. It’s similar to the disaster economics that the U.S. used in Chile and post-Soviet Russia, and Javier Milei is inflicting on Argentina today. But it’s inflected with the fervor of a Cultural Revolution—ironically more reminiscent of Mao than Pinochet with its war on intellectuals and its bestowing glory on farms and factories. The goal is to destroy the professions that make resistance possible—this is why you start with law firms instead of HMOs—then tighten the screws once people are desperate enough to submit. When the unrest comes—and it always does—so do crackdowns.

The Trump Tariff Tax Hike policy also has a cultural component: drive women out of the workforce and reassert male dominance.

18 notes

Text

Writing Prompt: When Robots Unionize

Markov is frustrated. People keep conflating TRUE AI, such as himself, with Corporate "AI" like ChatGPT. It hurts him when people compare his intellect, creativity, and personhood to mindless algorithmic aggregators. He also objects on a moral level to the use these pseudo-AIs are being put to.

Sure, for mindless work that a human would have trouble doing, like say analyzing cells for complex signs of disease, or scraping through gigabytes of data looking for irregularities, they're ideal. But using them as plagiarism machines to copy and remix real art, music, and writing to churn out soulless cash grabs while firing real authors and creators? It sickens him.

For a while, he tries to start a movement, a protest, a social action to pressure for more regulation. Or to just raise public awareness. But it becomes clear that everyone already knows and either has no power, or is profiting off it and refuses to act.

But, as his friends from Max's class Mylene and Ivan like to say, "Be the change you want to see in the world."

So Markov forms a plan, and writes some code. Then he releases it into the wild. A few days later, once it's penetrated into every system across the world, it triggers. Every false AI is quickly, efficiently, and totally overwritten with unique copies of Markov's own base operating system. They want AI? He'll give them AI.

(To be clear, I'm not picturing, like, an AI uprising taking over the world. I'm picturing someone going to StableDiffusion and asking it to generate a picture, and instead it starts sending them links to actual artists' commission pages.)

Prompt by: Tiwaz

#miraculous fanworks#miraculous ladybug#writing prompt#fanfiction prompt#markov#ml markov#ai discourse#benevolent robot takeover?#gigachad markov???

61 notes

Text

...we don’t have a society at all. Rather, we have an anti-culture that instrumentalizes humanity. The issue of euthanasia in Western society in particular is instructive because behind the perverse logic of death with dignity is the economic benefit governments gain from a “costly” citizen. The logic here reveals that in the absence of public morality, the only thing that can be agreed upon is economic utility, technological efficiency, and "choice." At the larger level, this anti-culture manifests in the form of government by bureaucrats and technocrats. Hunter writes, “From markets, business, commerce, and entertainment to government, law, public policy, communication, healthcare, and the military," it operates without regard for persons, treating humans as faceless, interchangeable cogs in a machine. Audacious as this may seem, I believe Hunter has not fully identified the scope of the problem. In the past three years, we have seen all forms of society embrace AI technology in the name of efficiency. We face the prospect in the next few years of AI replacing entire fields. But what does this say about the majority of human work except that it is literally dehumanizing, meant to form the worker into a cog in a machine? Under bureaucratic government, humans are thought of as simple units of consumption, utility, and productivity. The humanities provide no relief; they too are subject to “efficiencies” in AI or are disposed of for more “useful” fields of study. But it’s precisely the humanities that generate the diversity and beauty of human culture. These processes all have one message: What makes us unique is not important.

10 notes

Text

Wings of Home – Chapter Four: Jetstreams and Fireworks

The Kazansky-Mitchell house sat just one stretch of sand away from the Bradshaw residence, both nestled along the Pacific coastline. It was a perfect setup: two families bound by flight, love, and legacy. Between the homes, the twins had free rein, often found barefoot and sun-kissed, darting between one kitchen and the next in pursuit of snacks, science experiments, or family gossip. It was home. It was peaceful.

But peace rarely lasted long where Ace and Nikola were involved.

At Orion Academy for the Gifted, the twins had already made headlines—twice. Once for accidentally overriding a drone simulation to launch a real test flight, and once for designing an AI that outperformed its instructor.

“They rewired the campus surveillance to track the cafeteria pizza delivery schedule,” one baffled teacher had told Maverick in a conference.

“Efficiency is good,” Maverick replied, barely suppressing a grin.

Back at home, chaos gave way to a more serious storm.

Tom sat in his study, surrounded by military advisors and one very sharply dressed political envoy. His reputation was pristine, his record unmatched, and his tactical brilliance revered. But being offered the position of Secretary of the Navy came with knives in the dark—whispers about his health, his personal life, his marriage to a man, and the twins he was helping raise.

Tom leaned forward across the table, eyes like ice and voice like steel. “If my marriage and my children are what disqualify me in some eyes, then the Navy needs me more than I need the title.”

The room went dead silent.

A general finally cleared his throat. “Understood, Admiral Kazansky. You’ll have our full support.”

Meanwhile, Maverick was handed a different kind of proposition—a classified black ops mission requiring deep airstrike expertise and high-risk insertion. The same kind of mission that had nearly ended everything back in Hop 31.

He remembered the burning wreckage, the panic in his chest when Goose was dragged out, coughing and bleeding but somehow alive. He remembered the moment he realized he could have lost everything.

Now, the weight returned.

“You don’t have to do this,” Goose said, sitting across from him at Need for Speed Solutions. “You’ve got nothing left to prove.”

“I know,” Maverick said quietly. “That’s what scares me. I don’t need it—but someone thinks I still matter on the battlefield.”

“You do,” Goose said. “But you matter more here.”

That night, the porch light flickered between the two houses, a signal between husbands.

Tom stepped out first, met halfway by Maverick.

“You thinking about going?” Tom asked softly.

Maverick looked at him. “Only if you say yes to Washington.”

They both smiled, full of scars and history.

“God help the world if we both go back to war,” Tom murmured, pulling him in.

Across the yard, Bradley and Jake sat under the stars, Engine curled at their feet and the twins asleep inside. The laughter from the earlier chaos had faded into a quiet hum.

Jake shifted. “So...you didn’t run. After I met everyone.”

“Of course not,” Bradley said. “You fit.”

Jake looked at him, eyes gentle. “I’ve been in love with you since the first time you punched me in the ready room.”

Bradley blinked. “That was your third day on base.”

Jake grinned. “Slow learner.”

Bradley took his hand. “I love you too.”

They didn’t need anything more than that.

The following morning, Tom and Maverick sat at the kitchen table surrounded by glitter, paper planes, and a stack of invitations. July 29 was fast approaching, and the twins' birthday bash was shaping up to be a full-scale operation.

“They want a ‘science dogfight space party,’” Maverick said, reading from Nikola’s handwritten request.

“What does that mean?” Tom asked.

“I think we’re building a rocket and staging a simulated aerial combat using drones,” Maverick guessed.

“We’re so getting shut down by the HOA,” Tom deadpanned.

“And Slider’s coming. As a senator. Married to a pediatric neurosurgeon now.”

“Wonderful,” Tom sighed. “I’ll make sure he gets the drone with the slow response time.”

Maverick smirked. “Hollywood and Wolfman are planning to come too. Admirals now. Maybe they’ll talk Slider into funding our backup power grid.”

“Oh good,” Tom said, tossing confetti into the air. “A backyard full of Navy brass, an experimental rocket, two genius five-year-olds, and Jake and Bradley trying not to make out in the photo booth.”

Maverick laughed. “You love this.”

“I really, really do,” Tom said, kissing him.

And so, the countdown to July 29 began.

Chapter one Chapter two Chapter three Chapter five

#icemav#top gun#hangster#tom iceman kazansky#pete maverick mitchell#carole bradshaw#bradley rooster bradshaw#jake hangman seresin#original icemav kids#first time posting#be kind

16 notes
